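Apache Spark is an open-source distributed computing system that has reshaped big data processing and analytics. Its fast, in-memory data processing has made it a popular choice among data engineers and data scientists.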

Install Apache Spark on Fedora 38

Step 1. Before installing Apache Spark on Fedora 38, make sure the system's packages are up to date. This gives you the latest features and bug fixes and reduces the chance of problems during the installation:

    sudo dnf update

Step 2. Install Java.

Apache Spark relies on a Java Development Kit (JDK) to run. To install OpenJDK 11, run the following command:

    sudo dnf install java-11-openjdk

Now verify the installation by checking the Java version:

    java -version
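If you want to check the version from a script rather than by eye, the output of `java -version` can be reduced to a major version number. The following is a minimal sketch, not part of the original steps; `java_major` is a hypothetical helper name, and the parsing assumes the usual `version "x.y.z"` output format.

```shell
# Reduce a Java version string to its major number.
# "11.0.21" -> 11, and the legacy "1.8.0_392" -> 8.
java_major() {
  v="$1"
  case "$v" in
    1.*) v="${v#1.}" ;;   # legacy 1.x scheme: drop the leading "1."
  esac
  echo "${v%%[._-]*}"     # keep everything before the first '.', '_' or '-'
}

if command -v java >/dev/null 2>&1; then
  # `java -version` writes to stderr; the version is the quoted field.
  raw="$(java -version 2>&1 | awk -F'"' '/version/ {print $2; exit}')"
  echo "Java major version: $(java_major "$raw")"
else
  echo "java is not on PATH"
fi
```

For the OpenJDK 11 package installed above, the script should report 11.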

Step 3. Install Apache Spark on Fedora 38.

Visit the official Apache Spark website and choose the Spark version that best fits your requirements. For most users, the package pre-built for Hadoop is suitable:

    wget https://archive.apache.org/dist/spark/spark-3.5.0/spark-3.5.0-bin-hadoop3.tgz
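It is worth confirming that the download is intact before extracting it. Apache publishes a SHA-512 digest next to each release file. The sketch below compares that digest with the local file; `verify_sha512` and the `EXPECTED_SHA512` variable are hypothetical names, and the digest itself has to be copied from the release's `.sha512` file because it is not reproduced here.

```shell
# Compare a file's SHA-512 digest with an expected lowercase hex string.
verify_sha512() {
  actual="$(sha512sum "$1" | cut -d' ' -f1)"
  [ "$actual" = "$2" ]
}

archive="spark-3.5.0-bin-hadoop3.tgz"
# EXPECTED_SHA512 is a placeholder: set it to the digest published for this release.
expected="${EXPECTED_SHA512:-}"

if [ -f "$archive" ] && [ -n "$expected" ]; then
  if verify_sha512 "$archive" "$expected"; then
    echo "checksum OK"
  else
    echo "checksum MISMATCH" >&2
  fi
else
  echo "skipping check: archive or expected digest not provided"
fi
```

`sha512sum` prints lowercase hex, so a digest published in uppercase or with spaces would need to be normalized before the comparison.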

After downloading Spark, extract the archive using the following command:

    tar -xvf spark-3.5.0-bin-hadoop3.tgz

Next, move the extracted directory to /opt:

    sudo mv spark-3.5.0-bin-hadoop3 /opt/spark

Then add a user to run Spark and give that user ownership of the Spark directory:

    sudo useradd spark
    sudo chown -R spark:spark /opt/spark

Step 4. Create systemd services.

Now create a systemd service file to manage the Spark master service:

    sudo nano /etc/systemd/system/spark-master.service

Add the following content:

    [Unit]
    Description=Apache Spark Master
    After=network.target

    [Service]
    Type=forking
    User=spark
    Group=spark
    ExecStart=/opt/spark/sbin/start-master.sh
    ExecStop=/opt/spark/sbin/stop-master.sh

    [Install]
    WantedBy=multi-user.target
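If you prefer not to edit the file interactively, the same unit can be written from a script. This is a sketch under a few assumptions: `write_master_unit` is a hypothetical helper, and the file is staged in a scratch directory for review rather than written straight to /etc.

```shell
# Write the Spark master unit shown above to the path given as $1.
write_master_unit() {
  cat > "$1" <<'EOF'
[Unit]
Description=Apache Spark Master
After=network.target

[Service]
Type=forking
User=spark
Group=spark
ExecStart=/opt/spark/sbin/start-master.sh
ExecStop=/opt/spark/sbin/stop-master.sh

[Install]
WantedBy=multi-user.target
EOF
}

# Stage the file in a scratch directory so it can be reviewed first.
staging="$(mktemp -d)"
write_master_unit "$staging/spark-master.service"
echo "Review $staging/spark-master.service, then install it with:"
echo "  sudo install -m 644 $staging/spark-master.service /etc/systemd/system/"
```

Staging the file first keeps the script harmless to run as a normal user; only the final `install` step needs root.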

Save and close the file, then create a service file for the Spark slave (worker):

    sudo nano /etc/systemd/system/spark-slave.service

Add the following configuration, replacing your-IP-server with the address of the Spark master:

    [Unit]
    Description=Apache Spark Slave
    After=network.target

    [Service]
    Type=forking
    User=spark
    Group=spark
    ExecStart=/opt/spark/sbin/start-slave.sh spark://your-IP-server:7077
    ExecStop=/opt/spark/sbin/stop-slave.sh

    [Install]
    WantedBy=multi-user.target
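The `your-IP-server` placeholder is easy to leave in by accident. As a rough illustration, the master URL can be built from a host name or address and substituted into the `ExecStart` line. `master_url` is a hypothetical helper, and 192.0.2.10 is a documentation-only example address, not a real server.

```shell
# Build a standalone master URL; the port defaults to 7077 as in the unit above.
master_url() {
  echo "spark://$1:${2:-7077}"
}

# Replace the placeholder in the ExecStart line of the unit file.
line='ExecStart=/opt/spark/sbin/start-slave.sh spark://your-IP-server:7077'
echo "$line" | sed "s|spark://your-IP-server:7077|$(master_url 192.0.2.10)|"
```

The same `sed` expression could be applied to the saved unit file with `sudo sed -i`, using your own master address in place of the example.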

Save and close the file, then reload the systemd daemon and start and enable the Spark master service:

    sudo systemctl daemon-reload
    sudo systemctl start spark-master
    sudo systemctl enable spark-master
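To confirm that the services actually came up, you can query their state. The sketch below assumes the unit names used in Step 4; `service_state` is a hypothetical helper that falls back to "unknown" when `systemctl` is unavailable or reports nothing.

```shell
# Print the systemd state of a unit, or "unknown" if it cannot be determined.
service_state() {
  state=""
  if command -v systemctl >/dev/null 2>&1; then
    # is-active exits non-zero for inactive units, so ignore the exit code.
    state="$(systemctl is-active "$1" 2>/dev/null || true)"
  fi
  echo "${state:-unknown}"
}

for svc in spark-master spark-slave; do
  echo "$svc: $(service_state "$svc")"
done
```

The commands in this step start only the master. If the worker unit from Step 4 is in place, `sudo systemctl enable --now spark-slave` would start it and enable it at boot in one command.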

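Step 5. Configure the firewall.

First, identify the ports that Apache Spark uses for its components. The basic ports you typically need to open are:

- Spark Master Web UI: port 8080 (or the configured port)

- Spark master port: 7077 (or the configured port)

- Spark worker ports: random ports within a range (by default 1024-65535)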
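Random worker ports are awkward to open in a firewall. One option is to pin the ports in `/opt/spark/conf/spark-env.sh`, which Spark's start scripts read if the file exists. The fragment below is a sketch: the variable names come from Spark's standalone mode, while 7078 is an assumed value chosen for illustration.

```shell
# /opt/spark/conf/spark-env.sh (sketch)
export SPARK_MASTER_PORT=7077          # master RPC port, same as the default
export SPARK_MASTER_WEBUI_PORT=8080    # master web UI port, same as the default
export SPARK_WORKER_PORT=7078          # assumed value; the worker port is random if unset
export SPARK_WORKER_WEBUI_PORT=8081    # worker web UI port, same as the default
```

With a fixed worker port, a single extra firewall rule for that port replaces the need to open a wide range. The services must be restarted for the settings to apply.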

To open the Spark Master Web UI and master ports (8080 and 7077 in this example), use the following firewall-cmd commands:

    sudo firewall-cmd --zone=public --add-port=8080/tcp --permanent
    sudo firewall-cmd --zone=public --add-port=7077/tcp --permanent

After adding the necessary rules, you should reload the firewall for the changes to take effect:

    sudo firewall-cmd --reload
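After the reload, it helps to confirm that the rules are in place. The following sketch checks the output of `firewall-cmd --list-ports` for the two ports opened above; `port_listed` is a hypothetical helper, and the check is skipped on systems without firewalld.

```shell
# Succeed if entry $2 (for example 8080/tcp) appears in the
# space-separated list $1, as printed by `firewall-cmd --list-ports`.
port_listed() {
  case " $1 " in
    *" $2 "*) return 0 ;;
    *) return 1 ;;
  esac
}

if command -v firewall-cmd >/dev/null 2>&1; then
  open_ports="$(sudo firewall-cmd --zone=public --list-ports 2>/dev/null || true)"
  for p in 8080/tcp 7077/tcp; do
    if port_listed "$open_ports" "$p"; then
      echo "$p is open"
    else
      echo "$p is NOT open"
    fi
  done
fi
```

Matching on whole entries avoids false positives such as 8080/tcp matching 18080/tcp.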

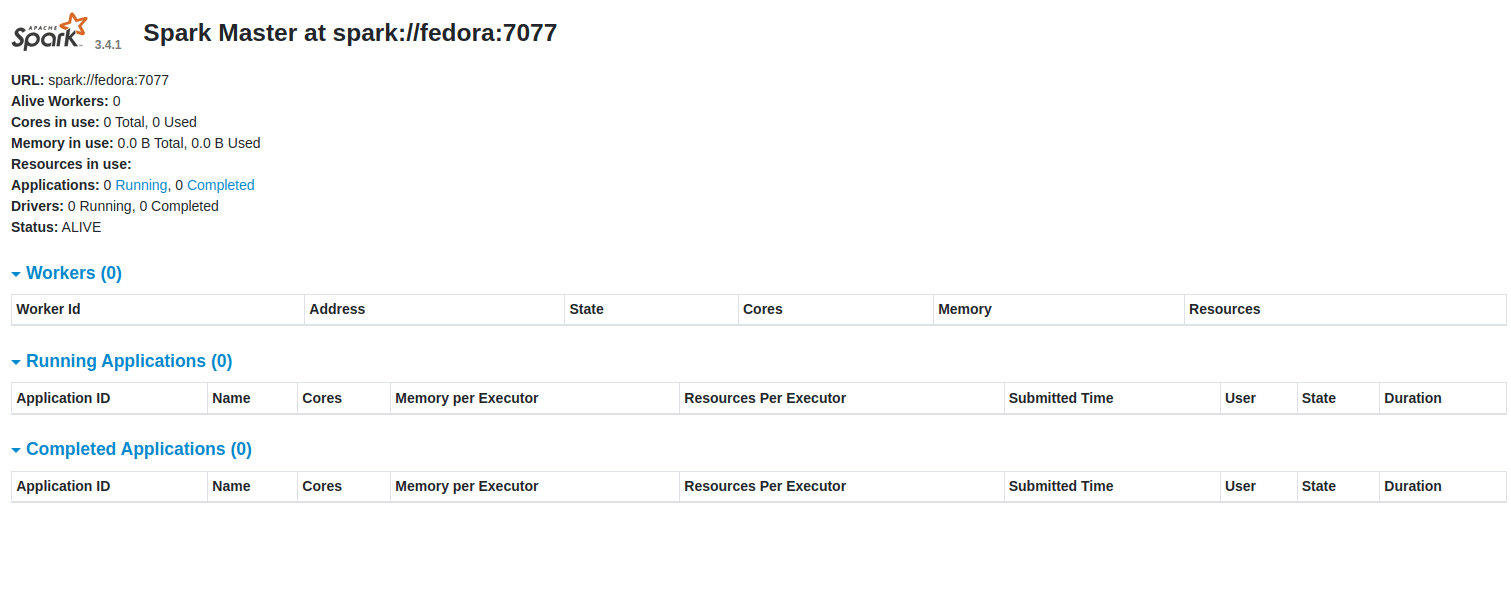

Step 6. Access the Apache Spark web interface.

To verify that Spark is installed correctly and the cluster is running, open a web browser and go to the Spark Web UI at the following URL:

    http://your-IP-address:8080

The Spark dashboard should appear in the browser.
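On a server without a browser, the same check can be done from the command line. This sketch uses `curl` to request the master UI and reports whether it answered with HTTP 200; `check_ui` and the `SPARK_UI_HOST` variable are hypothetical names introduced for the example.

```shell
# Print "up" if the URL answers with HTTP 200 within 5 seconds, else "down".
check_ui() {
  code="$(curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$1" 2>/dev/null || true)"
  if [ "$code" = "200" ]; then
    echo "up"
  else
    echo "down"
  fi
}

# SPARK_UI_HOST is a placeholder; it defaults to the local machine.
check_ui "http://${SPARK_UI_HOST:-localhost}:8080"
```

A "down" result from another machine while the service is active locally usually points back to the firewall rules from Step 5.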

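Thank you for using this tutorial to install Apache Spark on a Fedora 38 system. For additional help or useful information, we recommend checking the official Spark website.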